SEO in 2018: The Ultimate Guide to Rockin' It

The year 2017 was relatively calm for SEOs. But no matter how peaceful the current SEO landscape looks, that doesn't mean you can lean back in your chair and relax!

Voice search, artificial intelligence, and machine learning were among the top buzzwords of the past twelve months. While AI is more likely to affect PPC and advertising management, voice search is going to disrupt the SEO community in the near future. Google's communication became even more disappointing in 2017. The search giant has returned to its 'black box' policy: webmasters detect major algorithm updates, and the search engine is reluctant to comment or provide any details. For example, here's all we got from Gary Illyes about Fred:

"From now on every update, unless otherwise stated, shall be called Fred".

SEOs detected about five Google algorithm updates in 2017, and all we know about them comes from rumors and speculation. So, to help you get to grips with the uncertainty, I've prepared a list of recommendations SEOs should focus on right now.

Chapters:

1 Be findable

2 Master Panda survival basics

3 Learn to combat Penguin

4 Improve user experience

5 Be mobile-friendly

6 Consider implementing AMP

7 Earn social signals — the right way

8 Revise your local SEO plan

Chapter 1: Be findable

The rule is simple: search engines won't rank your site unless they can find it. So, just like before, it is extremely important to make sure search engines can discover your site's content, and that they can do so quickly and easily. Here's how.

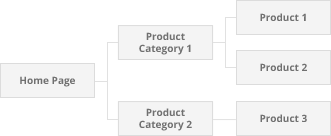

1. Keep a logical site structure

Good practice

- The important pages are reachable from the homepage.

- Site pages are arranged in a logical tree-like structure.

- The names of your URLs (pages, categories, etc.) reflect your site's structure.

- Internal links point to relevant pages.

- You use breadcrumbs to facilitate navigation.

- There's a search box on your site to help visitors discover useful content.

- You use rel=next and rel=prev to convert pages with infinite scrolling into paginated series.

Bad practice

- Certain important pages can't be reached via navigational or ordinary links.

- You cram a huge number of pages into one navigation block, such as an endless drop-down menu.

- You try to link to each and every inner page of your site from your homepage.

- It is difficult for users to go back and forth between site pages without resorting to the browser's Back and Forward buttons.
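To make the rel=next/rel=prev recommendation concrete, here is a minimal Python sketch that produces the `<link>` tags for one page of a paginated series (they belong in the page's `<head>`). The URL scheme (page 1 at the base URL, later pages at `?page=N`) and the helper name `pagination_links` are hypothetical assumptions; adapt them to your own URLs.

```python
def pagination_links(base_url: str, page: int, total_pages: int) -> list:
    """Return rel="prev"/rel="next" <link> tags for page `page` (1-based).

    Assumes a hypothetical scheme: page 1 lives at `base_url`,
    every later page at `base_url?page=N`.
    """
    if not 1 <= page <= total_pages:
        raise ValueError("page out of range")

    def url_for(n: int) -> str:
        return base_url if n == 1 else f"{base_url}?page={n}"

    tags = []
    if page > 1:  # every page except the first points back
        tags.append(f'<link rel="prev" href="{url_for(page - 1)}">')
    if page < total_pages:  # every page except the last points forward
        tags.append(f'<link rel="next" href="{url_for(page + 1)}">')
    return tags


# Page 2 of 3 links back to page 1 and forward to page 3.
for tag in pagination_links("https://www.mywebsite.com/blog", 2, 3):
    print(tag)
```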

An example of a logical site structure:

An example of a clean URL structure:

www.mywebsite.com/product-category-1/product-1

www.mywebsite.com/product-category-2/product-3
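URLs like these can be generated from category and product names. The sketch below is one possible approach, assuming you want lowercase, hyphen-separated, ASCII-only path segments; `slugify` and `product_url` are hypothetical helper names, not part of any particular CMS.

```python
import re
import unicodedata


def slugify(name: str) -> str:
    """Turn a human-readable name into a lowercase, hyphen-separated URL segment."""
    # Strip accents, e.g. "Café" -> "Cafe"
    ascii_name = unicodedata.normalize("NFKD", name).encode("ascii", "ignore").decode("ascii")
    # Replace every run of non-alphanumeric characters with a single hyphen
    slug = re.sub(r"[^a-z0-9]+", "-", ascii_name.lower())
    return slug.strip("-")


def product_url(domain: str, category: str, product: str) -> str:
    """Build a URL whose path mirrors the category -> product hierarchy."""
    return f"{domain}/{slugify(category)}/{slugify(product)}"


print(product_url("www.mywebsite.com", "Product Category 1", "Product 1"))
# www.mywebsite.com/product-category-1/product-1
```

Keeping the path in the same order as the site tree means the URL alone tells users and crawlers where a page sits in your structure.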

SEO PowerSuite tip:

Check site structure with WebSite Auditor

2. Make use of an XML sitemap & RSS feeds

The XML sitemap helps search bots discover and index content on your site. This is similar to how a tourist would discover more places in an unfamiliar city if they had a map.

RSS/Atom feeds are a great way to notify search engines about any fresh content you add to the site. In addition, RSS feeds are often used by journalists, content curators and other people interested in getting updates from particular sources.

Google says: "For optimal crawling, we recommend using both XML sitemaps and RSS/Atom feeds. XML sitemaps will give Google information about all of the pages on your site. RSS/Atom feeds will provide all updates on your site, helping Google to keep your content fresher in its index."
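As an illustration of a feed that carries only fresh content, here is a minimal Python sketch that builds an RSS 2.0 feed with the standard library. The 30-day freshness window, the `(title, url, updated)` item shape and the function name `build_rss` are assumptions made for the example.

```python
import xml.etree.ElementTree as ET
from datetime import datetime, timedelta, timezone
from email.utils import format_datetime


def build_rss(site_title, site_url, items, now, max_age_days=30):
    """Build an RSS 2.0 feed containing only items updated in the last `max_age_days`.

    `items` is a list of (title, url, updated) tuples, where `updated` is a
    timezone-aware datetime. The 30-day window is an arbitrary example value.
    """
    rss = ET.Element("rss", version="2.0")
    channel = ET.SubElement(rss, "channel")
    ET.SubElement(channel, "title").text = site_title
    ET.SubElement(channel, "link").text = site_url
    ET.SubElement(channel, "description").text = f"Latest updates from {site_title}"

    cutoff = now - timedelta(days=max_age_days)
    recent = [item for item in items if item[2] >= cutoff]
    # Newest items first
    for title, url, updated in sorted(recent, key=lambda item: item[2], reverse=True):
        entry = ET.SubElement(channel, "item")
        ET.SubElement(entry, "title").text = title
        ET.SubElement(entry, "link").text = url
        ET.SubElement(entry, "guid").text = url
        # RSS 2.0 expects RFC 822 dates, e.g. "Wed, 03 Jan 2018 00:00:00 +0000"
        ET.SubElement(entry, "pubDate").text = format_datetime(updated)
    return ET.tostring(rss, encoding="unicode")


now = datetime(2018, 1, 5, tzinfo=timezone.utc)
items = [
    ("New post", "https://www.mywebsite.com/new-post", datetime(2018, 1, 3, tzinfo=timezone.utc)),
    ("Old post", "https://www.mywebsite.com/old-post", datetime(2017, 6, 1, tzinfo=timezone.utc)),
]
print(build_rss("My Website", "https://www.mywebsite.com", items, now))
```

Only "New post" ends up in the feed; the older item stays discoverable through the XML sitemap instead.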

Good practice

- Your sitemap/feed includes only canonical versions of URLs.

- While updating your sitemap, you update a page's modification time only if substantial changes have been made to it.

- If you use multiple sitemaps, you add another sitemap only when your current ones have reached the URL limit (50,000 URLs per sitemap).

- Your RSS/Atom feed includes only recently updated items, making it easier for search engines and visitors to find your fresh content.

Bad practice

- Your XML sitemap or feed includes URLs that search engine robots are not allowed to index, as specified in your robots.txt or the robots meta tag.

- Non-canonical URL duplicates are included in your sitemap or feed.

- In your sitemap, modification time is missing or is updated just to "persuade" search engines that your pages have been brought up to date, while in fact they haven't.
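The good and bad practices listed here can be encoded in a small generator. This is a minimal Python sketch, assuming each page is described by a dict with hypothetical keys `url`, `canonical`, `indexable` and `lastmod`; it keeps only indexable canonical URLs and starts a new sitemap file only when the current one hits the 50,000-URL limit.

```python
import xml.etree.ElementTree as ET
from datetime import date

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"
MAX_URLS_PER_SITEMAP = 50_000  # per-file URL limit in the sitemaps.org protocol


def build_sitemaps(pages, max_urls=MAX_URLS_PER_SITEMAP):
    """Return a list of sitemap XML strings.

    `pages` is a list of dicts with the (hypothetical) keys "url", "canonical",
    "indexable" and "lastmod" (a datetime.date).
    """
    # Keep only canonical URLs that robots are allowed to index
    eligible = [p for p in pages if p["indexable"] and p["url"] == p["canonical"]]
    sitemaps = []
    # Start a new sitemap only when the current one has reached the limit
    for start in range(0, len(eligible), max_urls):
        urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
        for page in eligible[start:start + max_urls]:
            url = ET.SubElement(urlset, "url")
            ET.SubElement(url, "loc").text = page["url"]
            # Bump lastmod only after substantial content changes
            ET.SubElement(url, "lastmod").text = page["lastmod"].isoformat()
        sitemaps.append(ET.tostring(urlset, encoding="unicode"))
    return sitemaps


pages = [
    {"url": "https://www.mywebsite.com/product-category-1/product-1",
     "canonical": "https://www.mywebsite.com/product-category-1/product-1",
     "indexable": True, "lastmod": date(2017, 12, 20)},
    # A tracking-parameter duplicate: excluded because it is not canonical
    {"url": "https://www.mywebsite.com/product-category-1/product-1?ref=mail",
     "canonical": "https://www.mywebsite.com/product-category-1/product-1",
     "indexable": True, "lastmod": date(2017, 12, 20)},
]
print(build_sitemaps(pages)[0])
```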

SEO PowerSuite tip:

Use XML sitemap builder in WebSite Auditor

3. Befriend Schema markup

Schema markup is used to tag entities (people, products, events, etc.) in your pages' content. Although it does not affect your rankings, it helps search engines better interpret your content.

To put it simply, a Schema template is similar to a doorplate: if it says 'CEO Larry Page', you know whom to expect behind the door.

If you are looking for some extra help with the Schema markup, check out the detailed Structured Data Implementation Guide from our team.

Good practice

- Review the list of available Schemas and pick the ones that apply to your site's content.

- If it is difficult for you to edit the code on your own, you can use Google's Structured Data Markup Helper.

- Test the markup using Google's Structured Data Testing Tool.

Bad practice

- You use Schemas to trick search engines into believing your page contains a type of information it doesn't (for example, that it's a review when it isn't). Such behavior can result in a penalty.
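To show what Schema markup looks like in practice, here is a minimal Python sketch that emits a JSON-LD block (one of the structured data formats Google reads) for a product page. The function name and sample product are hypothetical; the `@type` and property names come from the schema.org vocabulary. Check the output with Google's Structured Data Testing Tool before publishing.

```python
import json


def product_jsonld(name, description, price, currency, rating=None, review_count=0):
    """Return a JSON-LD <script> tag describing a product with schema.org vocabulary."""
    data = {
        "@context": "https://schema.org",
        "@type": "Product",
        "name": name,
        "description": description,
        "offers": {
            "@type": "Offer",
            "price": f"{price:.2f}",
            "priceCurrency": currency,
        },
    }
    # Add ratings only when genuine reviews on the page back them up;
    # marking up reviews that don't exist is the kind of trick that risks a penalty.
    if rating is not None and review_count > 0:
        data["aggregateRating"] = {
            "@type": "AggregateRating",
            "ratingValue": rating,
            "reviewCount": review_count,
        }
    payload = json.dumps(data, indent=2, ensure_ascii=False)
    return f'<script type="application/ld+json">\n{payload}\n</script>'


print(product_jsonld("Product 1", "A sample product.", 19.99, "USD", rating=4.6, review_count=32))
```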

4. Leverage featured snippets

A featured snippet (also called a position 0 result) is a search result that contains a brief answer to the search query. The significance of position 0 is growing fast, because voice assistants normally use featured snippets (if available) to respond to users' queries and then cite the address of the website where the information is published. Featured snippets can pave your way to the answers provided by Amazon Echo or Google Assistant.

Any website has a chance to be selected for this sweet spot. Here are a few things you can do to increase your chances of getting there:

1) Identify simple questions you might answer on your website;

2) Provide a clear direct answer;

3) Provide additional supporting information (like videos, images, charts, etc.).

Chapter 2: Master Panda survival basics

Panda is a filter in Google's ranking algorithm that aims to sift out pages with thin, non-authentic, low-quality content. Early in 2016, Gary Illyes announced that Panda had become part of Google's core ranking algorithm. The bad news is that you can no longer tell for sure whether your rankings changed because of Panda or other issues.

1. Improve content quality

Good practice

- You create genuinely useful, expert-level content and present it in the most engaging form possible.

- Not every page of your website has to read like a Wikipedia article. Use your common sense and make sure the overall image of your website is decent.

- There is no recommended text length. A page should include as many words as necessary to cover the topic or answer the user's question.

- If you have user-generated content on your website, make sure it's useful and free from spam.

- You block non-unique or unimportant pages (e.g. various policies) from indexing.

Bad practice

- Your website relies on "scraped" content (content copied from other sites with no extra value added). This puts you at risk of getting hit by Panda.

- You simply "spin" somebody else's content and repost it to your site.

- Many of your site's pages have duplicate or very similar content.

- You base your SEO strategy around a network of "cookie-cutter" websites (websites built quickly from a widely used template).
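One common way to spot "duplicate or very similar content" is to compare pages by their overlapping word sequences (shingles) using Jaccard similarity. The Python sketch below shows that general technique; it is not how WebSite Auditor works internally, and the 5-word shingle size and 0.8 threshold are hypothetical defaults you would tune on your own pages.

```python
import re
from itertools import combinations


def shingles(text, k=5):
    """Return the set of k-word shingles (overlapping word sequences) in `text`."""
    words = re.findall(r"\w+", text.lower())
    if len(words) < k:
        return {tuple(words)} if words else set()
    return {tuple(words[i:i + k]) for i in range(len(words) - k + 1)}


def jaccard(a, b):
    """Share of shingles two pages have in common (1.0 = identical sets)."""
    if not a and not b:
        return 1.0
    return len(a & b) / len(a | b)


def near_duplicates(pages, threshold=0.8, k=5):
    """Yield (url_a, url_b, similarity) for page pairs at or above `threshold`.

    `pages` maps URL -> visible page text.
    """
    sets = {url: shingles(text, k) for url, text in pages.items()}
    for (url_a, sh_a), (url_b, sh_b) in combinations(sets.items(), 2):
        sim = jaccard(sh_a, sh_b)
        if sim >= threshold:
            yield url_a, url_b, round(sim, 2)
```

Comparing every pair is quadratic, which is fine for a few thousand pages; larger sites usually switch to hashing schemes such as MinHash.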

SEO PowerSuite tip:

Use WebSite Auditor to check your pages for duplicate content

2. Make sure you get canonicalization right

Canonicalization is a way of telling search engines which page should be treated as the "standardized" version when several URLs return virtually the same content.

The main purpose of this is to avoid internal content duplication on your site. Although not a huge offense, duplication makes your site look messy, like a wild forest compared to a neatly trimmed garden.

Good practice

- You mark canonical pages using the rel="canonical" attribute.

- Your rel="canonical" is placed either in the <head> section of the page or in the HTTP header.

- The canonical page is live (doesn't return a 404 status code).

- The canonical page is not restricted from indexing in robots.txt or by other means.

Bad practice

- You've got multiple canonical URLs specified for one page.

- You've got rel="canonical" inserted into the <body> section of the page.

- Your pages are caught in an infinite loop of canonical URLs (page A points to page B, and page B points to page A). In this case, search engines will be confused by your canonicalization.
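The three canonicalization mistakes listed here can be checked mechanically. This is a minimal Python sketch using the standard-library HTML parser; the function names are hypothetical, and it assumes pages have an explicit `<head>` tag and that you have already collected a URL-to-canonical mapping for the loop check.

```python
from html.parser import HTMLParser


class CanonicalFinder(HTMLParser):
    """Collect rel="canonical" links and note whether each sits inside <head>.

    Assumes the page has an explicit <head> element.
    """

    def __init__(self):
        super().__init__()
        self.in_head = False
        self.canonicals = []  # list of (href, found_in_head)

    def handle_starttag(self, tag, attrs):
        if tag == "head":
            self.in_head = True
        elif tag == "body":
            self.in_head = False
        elif tag == "link":
            attrs = dict(attrs)
            rels = (attrs.get("rel") or "").lower().split()
            if "canonical" in rels:
                self.canonicals.append((attrs.get("href"), self.in_head))

    def handle_endtag(self, tag):
        if tag == "head":
            self.in_head = False


def canonical_problems(html):
    """Report multiple canonical tags and canonical tags placed outside <head>."""
    finder = CanonicalFinder()
    finder.feed(html)
    problems = []
    if len(finder.canonicals) > 1:
        problems.append("multiple canonical URLs")
    if any(not in_head for _, in_head in finder.canonicals):
        problems.append("canonical outside <head>")
    return problems


def in_canonical_loop(url, canonical_map):
    """True if following canonical links from `url` cycles (A -> B -> A).

    A self-referencing canonical (A -> A) is normal and ends the chain.
    """
    seen = set()
    current = url
    while current in canonical_map:
        target = canonical_map[current]
        if target == current:
            return False
        if current in seen:
            return True
        seen.add(current)
        current = target
    return False  # chain ends at a URL with no canonical declared
```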

SEO PowerSuite tip:

Use WebSite Auditor to check your pages for multiple canonical URLs

Chapter 3: Learn to combat Penguin

Google's Penguin filter aims to detect artificial backlink patterns and penalize sites that violate its quality guidelines with regard to backlinks. Penguin has become part of Google's core algorithm. In its early days, Penguin would hit an entire website; now it is more granular and demotes particular pages that have "bad" links. Keeping your backlink profile natural is another key point to focus on in 2018.

Good practice

- Your website mostly has editorial backlinks, earned because others quote, refer to, or share your content.

- Backlink anchor texts are as diverse as reasonably possible.

- Backlinks are acquired at a moderate pace.

- Spammy, low-quality backlinks are either removed or disavowed.

Bad practice

- Participating in link networks.

- Having lots of backlinks from irrelevant pages.

- Insignificant variation in link anchor texts.
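To gauge anchor text diversity, you can measure how much of your backlink profile a single anchor accounts for. The Python sketch below assumes you have exported your backlinks' anchor texts as a plain list (e.g. from a backlink tool); the 0.3 cut-off in `looks_over_optimized` is a hypothetical rule of thumb, not a threshold Google has published.

```python
from collections import Counter


def anchor_shares(anchors, top_n=3):
    """Return the `top_n` most common anchor texts with their share of all backlinks."""
    # Normalize case and whitespace so "Buy Shoes" and "buy  shoes" count as one anchor
    normalized = [" ".join(a.lower().split()) for a in anchors]
    counts = Counter(normalized)
    total = len(normalized)
    return [(text, round(count / total, 2)) for text, count in counts.most_common(top_n)]


def looks_over_optimized(anchors, max_share=0.3):
    """Flag a profile where one anchor text dominates.

    The 0.3 default is a hypothetical rule of thumb, not a Google threshold.
    """
    shares = anchor_shares(anchors, top_n=1)
    return bool(shares) and shares[0][1] > max_share


anchors = ["Buy cheap shoes"] * 6 + ["buy  cheap shoes", "example.com", "this article", "Example Shoes"]
print(anchor_shares(anchors))
print(looks_over_optimized(anchors))
```

Here 70% of the links use the same commercial anchor, which is exactly the "insignificant variation" pattern described in the list.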

SEO PowerSuite tip:

Check backlinks' relevancy with SEO SpyGlass

SEO PowerSuite tip:

Detect spammy links in your profile

Chapter 4: Improve user experience

Quite a few UX-related metrics have made their way into Google's ranking algorithm over the past years (site speed, mobile-friendliness, the HTTPS protocol). Hence, improving user experience can be a good way to boost your search engine rankings.

1. Increase site speed

Quite a few factors can affect page loading speed. The most common mistakes site owners make that increase page load time are huge images, large multimedia files, and other heavy design elements that make the site as slow as a snail.

Use Google's PageSpeed Insights to test your site speed and get recommendations on particular issues to fix.
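Since oversized images are named as a frequent culprit, a quick local audit can complement PageSpeed Insights. This Python sketch lists image files above a size budget; the 200 KB budget and the extension list are hypothetical values to adjust for your site, and the scan only looks at file size, not at dimensions or compression.

```python
from pathlib import Path

IMAGE_EXTENSIONS = {".jpg", ".jpeg", ".png", ".gif", ".webp"}


def image_sizes(root):
    """Map each image file under `root` (searched recursively) to its size in bytes."""
    return {
        str(p): p.stat().st_size
        for p in Path(root).rglob("*")
        if p.is_file() and p.suffix.lower() in IMAGE_EXTENSIONS
    }


def oversized(sizes, max_kb=200):
    """Return (path, size_kb) pairs above the hypothetical budget, largest first."""
    heavy = [(path, round(size / 1024)) for path, size in sizes.items() if size > max_kb * 1024]
    return sorted(heavy, key=lambda item: item[1], reverse=True)
```

For example, `oversized(image_sizes("public/"))` would list the heaviest images in a hypothetical `public/` folder, giving you a shortlist to resize or recompress.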

SEO PowerSuite tip:

Optimize your pages' loading time with WebSite Auditor

2. Improve engagement & click-through rates

The Bing and Yahoo! alliance, as well as Yandex, have officially confirmed they consider click-through rates and user behavior in their ranking algorithms. If you are optimizing for any of these search engines, it's worth trying to improve these aspects.

While Google is mostly silent on the subject, striving for greater engagement and higher click-through rates tends to bring better rankings as well as indirect SEO results in the form of attracted links, shares, mentions, etc.
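Click-through rate is simply clicks divided by impressions. The Python sketch below computes it per page and flags pages that lag well behind the site-wide average, assuming rows of `(url, clicks, impressions)` such as a search analytics export; the 0.5 factor is a hypothetical starting point. Keep in mind that CTR depends heavily on ranking position, so this is only a rough first filter.

```python
def ctr(clicks, impressions):
    """Click-through rate: clicks divided by impressions (0.0 if there were none)."""
    return clicks / impressions if impressions else 0.0


def low_ctr_pages(rows, factor=0.5):
    """Return URLs whose CTR is below `factor` times the site-wide CTR.

    `rows` holds (url, clicks, impressions) tuples; pages without
    impressions are skipped.
    """
    site_ctr = ctr(sum(r[1] for r in rows), sum(r[2] for r in rows))
    return [
        url
        for url, clicks, impressions in rows
        if impressions > 0 and ctr(clicks, impressions) < factor * site_ctr
    ]


rows = [("/a", 50, 1000), ("/b", 5, 1000), ("/c", 45, 1000)]
print(low_ctr_pages(rows))  # ['/b']
```

Pages flagged this way are good candidates for rewriting titles and meta descriptions.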

3. Consider moving your site to HTTPS

In August 2014, Google announced that HTTPS usage is treated as a positive ranking signal.

Currently, there is not much evidence that HTTPS-enabled sites outrank non-secure ones. The transition to HTTPS is somewhat controversial, because:

a) Most pages on the Web do not involve the transfer of sensitive information;

b) If performed incorrectly, the transition from HTTP to HTTPS may harm your rankings;

c) Most of your site's visitors do not know what HTTP is, so transferring to HTTPS is unlikely to give any conversion boost.
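One way to avoid a botched transition is to redirect every old HTTP URL straight to its exact HTTPS counterpart. This Python sketch builds such a one-hop redirect map (host, path and query preserved, default port 80 dropped); the function names are hypothetical, and turning the map into actual 301 rules depends on your web server and is not shown.

```python
from urllib.parse import urlsplit, urlunsplit


def https_target(url):
    """Return the HTTPS counterpart of an HTTP URL, keeping host, path, query and fragment."""
    parts = urlsplit(url)
    if parts.scheme != "http":
        raise ValueError(f"expected an http:// URL, got {url!r}")
    host = parts.hostname or ""
    # Drop the default HTTP port; keep any other explicit port as-is
    if parts.port not in (None, 80):
        host = f"{host}:{parts.port}"
    return urlunsplit(("https", host, parts.path, parts.query, parts.fragment))


def redirect_map(urls):
    """Map each old HTTP URL directly to its HTTPS URL (one 301 hop, no chains)."""
    return {url: https_target(url) for url in urls}


print(redirect_map(["http://www.mywebsite.com/product-category-1/product-1?color=red"]))
```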

4. Get prepared for HTTP/2

HTTP/2 is a new network protocol meant to replace the outdated HTTP/1.1. HTTP/2 is substantially faster than its predecessor. In terms of SEO, you may gain some ranking boost from the improved website speed.

On December 19, 2016, Google gave the green light to HTTP/2 usage, with Google Webmasters tweeting:

"Setting up HTTP/2? Go for it! Googlebot won't hold you back."

There were no further details. However, the tweet suggests that Googlebot will handle HTTP/2-enabled sites without problems. It's worth reading through the later discussion (dated May 2, 2017) about HTTP/2 between John Mueller and Bartosz Góralewicz, from which you can learn that "Googlebot can access sites that use HTTP/2", and that it's too early to drop support for HTTP/1.1.

At the time of writing, about 83.18% of web browsers can handle HTTP/2. You can keep track of HTTP/2 adoption by browsers on "Can I Use". In fact, if your website runs on HTTPS with the ALPN extension, you can already switch to HTTP/2, because most browsers support HTTP/2 over TLS, negotiated through ALPN.
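You can check which protocol your server actually negotiates through ALPN with Python's standard `ssl` module: the client offers `h2` and `http/1.1`, and the server's choice tells you whether HTTP/2 is on. This is a minimal sketch; the host name is a placeholder and the check needs network access.

```python
import socket
import ssl


def make_alpn_context():
    """TLS client context that offers HTTP/2 ("h2") first, then HTTP/1.1, via ALPN."""
    if not ssl.HAS_ALPN:
        raise RuntimeError("this Python/OpenSSL build does not support ALPN")
    ctx = ssl.create_default_context()
    ctx.set_alpn_protocols(["h2", "http/1.1"])
    return ctx


def negotiated_protocol(host, port=443, timeout=5.0):
    """Return the protocol the server selected ("h2" means HTTP/2), or None if ALPN was not used."""
    ctx = make_alpn_context()
    with socket.create_connection((host, port), timeout=timeout) as sock:
        with ctx.wrap_socket(sock, server_hostname=host) as tls:
            return tls.selected_alpn_protocol()


# Example (requires network access; the host is a placeholder):
# print(negotiated_protocol("www.mywebsite.com"))
```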

Chapter 5: Be mobile-friendly

The number of mobile searches has finally exceeded the number of desktop searches. The inevitable has happened: Google has begun experimenting with mobile-first indexing. This means its algorithms will primarily use the mobile version of a site's content to rank pages from that site, understand structured data, and show snippets from those pages in the results. It doesn't mean your website will drop out of the index if it has no mobile version; Google will fall back on the desktop version to rank the site. However, if you stick to a mobile-unfriendly design, your user experience and rankings may suffer. Almost a year after the announcement of mobile-first indexing, it still seems to be in a sandbox and is unlikely to be rolled out soon.

Good practice

- Your website uses responsive design.

- Your page's content can be read on a mobile device without zooming.

- You've got easy-to-tap navigation and links on your website.

- Your mobile website has high-quality content.

- Structured data is available on both the mobile and desktop versions of the website.

- Metadata is present on both versions of the website.

Bad practice

- You are using non-mobile-friendly technologies like Flash on your webpages.

- The mobile version of the website lacks metadata, schema markup, quality content, and in general is inferior to the desktop version.
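Two of the points here can be checked in a page's HTML: a responsive viewport meta tag (which lets content be read without zooming) and the absence of Flash embeds. This Python sketch covers only those two checks; it is a hypothetical helper, not a replacement for Google's Mobile-Friendly Test.

```python
from html.parser import HTMLParser


class MobileMetaChecker(HTMLParser):
    """Record the viewport meta tag and any Flash embeds found in a page."""

    def __init__(self):
        super().__init__()
        self.viewport = None
        self.uses_flash = False

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "meta" and (attrs.get("name") or "").lower() == "viewport":
            self.viewport = attrs.get("content") or ""
        # <embed>/<object> pointing at .swf files or the Flash MIME type indicate Flash content
        if tag in ("embed", "object"):
            src = (attrs.get("src") or attrs.get("data") or "").lower()
            if src.endswith(".swf") or attrs.get("type") == "application/x-shockwave-flash":
                self.uses_flash = True


def mobile_issues(html):
    """Return a list of basic mobile-friendliness problems found in `html`."""
    checker = MobileMetaChecker()
    checker.feed(html)
    issues = []
    if checker.viewport is None:
        issues.append("missing viewport meta tag")
    elif "width=device-width" not in checker.viewport.replace(" ", ""):
        issues.append("viewport does not use width=device-width")
    if checker.uses_flash:
        issues.append("page embeds Flash")
    return issues
```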

SEO PowerSuite tip:

Use the mobile-friendly test in WebSite Auditor

Chapter 6: Consider implementing AMP

The Accelerated Mobile Pages project (AMP for short) is a Google initiative to build a better, more user-friendly mobile Web by introducing a new "standard" for building web content for mobile devices. Basically, this standard is a set of rules that form a simple, lighter version of HTML, and pages built in compliance with AMP are designed to load very quickly on mobile devices.

According to Google's VP of Engineering David Besbris:

- AMP pages are 4x faster, and use 10x less data compared to non-AMP pages;

- On average AMP pages load in less than one second;

- 90 percent of AMP publishers experience higher CTRs;

- 80 percent of AMP publishers experience higher ad viewability rates.

It seems that publishers were not happy with the overly minimalistic AMP specs. 2017 saw gradual improvements to the functionality and looks of AMP pages, and even more is to come soon (check out the roadmap in the linked blog post).

The official AMP Project help pages are the best starting point for those who want to try the new technology out.

AMP is a strictly validated format: if some elements on your page do not meet the requirements, Google will most likely not serve the page to users. So after building your AMP page, check that it passes validation.
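Before running the official AMP validator, a quick pre-check can catch pages that are missing the most basic required markup. This Python sketch looks only for the `amp`/`⚡` attribute on `<html>`, a UTF-8 charset, a viewport tag, a canonical link and the async AMP runtime script; it deliberately ignores everything else (such as the required boilerplate CSS), so passing it does not mean the page is valid AMP.

```python
from html.parser import HTMLParser

AMP_RUNTIME = "https://cdn.ampproject.org/v0.js"
REQUIRED = ["amp html attribute", "utf-8 charset", "viewport meta tag",
            "canonical link", "AMP runtime script"]


class AmpBasicsChecker(HTMLParser):
    """Record which of a few required AMP elements appear in a page."""

    def __init__(self):
        super().__init__()
        self.found = set()

    def handle_starttag(self, tag, attrs):
        names = {name for name, _ in attrs}
        attrs = dict(attrs)
        if tag == "html" and ("amp" in names or "⚡" in names):
            self.found.add("amp html attribute")
        elif tag == "meta" and (attrs.get("charset") or "").lower() == "utf-8":
            self.found.add("utf-8 charset")
        elif tag == "meta" and attrs.get("name") == "viewport":
            self.found.add("viewport meta tag")
        elif tag == "link" and attrs.get("rel") == "canonical":
            self.found.add("canonical link")
        elif tag == "script" and attrs.get("src") == AMP_RUNTIME and "async" in names:
            self.found.add("AMP runtime script")


def missing_amp_basics(html):
    """Return the required items from REQUIRED that were not found in `html`."""
    checker = AmpBasicsChecker()
    checker.feed(html)
    return [item for item in REQUIRED if item not in checker.found]
```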

SEO PowerSuite tip:

Manage all AMP pages in WebSite Auditor

Chapter 7: Earn social signals the right way

Search engines favor websites with a strong social presence. Your Google+ posts or tweets can make it into Google's organic search results, which is a great opportunity to drive extra traffic. Although the effect of Twitter or Facebook links on SEO hasn't been confirmed, Google has said it treats social posts that are open for indexing just like any other webpages, so the hint here is clear.

Good practice

- You attract social links and shares with viral content.

- You make it easy to share your content: your pages have social buttons, and you check which image and message are automatically attached to the posts people share.

Bad practice

- You are wasting your time and money on purchasing 'Likes', 'Shares' and other sorts of social signals. Both social networks and search engines are able to detect accounts and account networks created for trading social signals.
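The image and message attached to a shared link usually come from Open Graph meta tags in the page's `<head>`. This Python sketch generates a basic set of them; the function name and sample values are hypothetical, while the `og:` property names are the standard Open Graph ones. Values are HTML-escaped so quotes or ampersands in titles don't break the markup.

```python
from html import escape


def open_graph_tags(title, description, url, image_url):
    """Return Open Graph <meta> tags that set the title, text and image of a shared link."""
    properties = [
        ("og:type", "website"),
        ("og:title", title),
        ("og:description", description),
        ("og:url", url),
        ("og:image", image_url),
    ]
    # escape() with quote=True makes values safe inside double-quoted attributes
    return "\n".join(
        f'<meta property="{prop}" content="{escape(value, quote=True)}">'
        for prop, value in properties
    )


print(open_graph_tags("SEO in 2018", "Recommendations to focus on this year.",
                      "https://www.mywebsite.com/seo-2018", "https://www.mywebsite.com/seo-2018.png"))
```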

SEO PowerSuite tip:

See your site's social signals in SEO PowerSuite

Chapter 8: Revise your local SEO plan

Local SEO has always been about reviews, consistent NAP, and basic SEO best practices. There's not much to add here, and the 2017 research by Local SEO Guide confirms it. The research also mentions 'engagement' as an important ranking factor; in other words, Google expects you to interact with your customers. Among other things, remember to respond to your reviews and update your listing with fresh photos, opening hours, etc.

Good practice

- Make sure your website mentions your business name, address, and phone number (NAP), and that this information is consistent across all the listings.

- Optimize your Google My Business listing: NAP, correct category, photos, and reviews are the priority.

- Create incentives to get more positive reviews and citations across the web.

- Keep up common SEO activities, such as on-page optimization and link building.

Bad practice

- You are using a false business address.

- Your website is listed under the wrong Google My Business category.

- There are reports of violations on your Google My Business location.
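NAP consistency can be spot-checked by normalizing each listing and comparing it with your reference record. This Python sketch is a rough example: it ignores case, punctuation and spacing and compares the last 10 phone digits (an assumption that fits US-style numbers), but it does not reconcile abbreviations such as "St" versus "Street". The listing sources and data are hypothetical.

```python
import re


def normalize_nap(name, address, phone):
    """Reduce a NAP record to a comparable form (case, punctuation, spacing, phone digits)."""
    def clean(text):
        return " ".join(re.sub(r"[^\w\s]", " ", text.lower()).split())

    digits = re.sub(r"\D", "", phone)
    # Compare only the last 10 digits so "+1 555..." matches "(555)..." (US-style assumption)
    return clean(name), clean(address), digits[-10:]


def inconsistent_listings(reference, listings):
    """Return the sources whose (name, address, phone) differs from `reference`."""
    ref = normalize_nap(*reference)
    return [source for source, record in listings.items() if normalize_nap(*record) != ref]


reference = ("Joe's Pizza", "12 Main St., Springfield", "(555) 123-4567")
listings = {
    "Google My Business": ("Joe's Pizza", "12 Main St, Springfield", "+1 555-123-4567"),
    "Yelp": ("Joe's Pizza", "14 Main St, Springfield", "555 123 4567"),
}
print(inconsistent_listings(reference, listings))  # ['Yelp']
```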

SEO PowerSuite tip:

Check website authority in SEO PowerSuite

What's coming in SEO in 2018?

Here are the main SEO trends I predict for 2018:

SEO remains part of multi-channel marketing

Customers can find your business through social, paid search, offline ads, etc. Organic search is an integral part of a complex path to conversion. Just be aware of these other channels and get savvy in additional spheres, if necessary.

Enhancements in artificial intelligence

Thanks to RankBrain (its algorithm based on artificial intelligence), Google has become smarter. It now also looks at synonyms, your niche connections, and location, and it understands entities, all to see whether you fit the bill (that is, the query). It looks like Google is actively moving toward a predictive and personalized search experience.

Voice search is gaining momentum

According to Forrester, in 2018 there will be around 26.2 million households with smart speaker devices in the U.S. alone. If the trend continues, we will definitely witness a big shift in the way people consume information and interact with search engines. It's both a challenge and an opportunity for SEOs.

No quick results, no easy SEO

With enhancements in artificial intelligence, Google has widened its net for spam and become even better at detecting sites with low-quality content or unnatural link patterns.

Traffic stays on Google increasingly

Google has definitely stepped up its efforts to provide immediate answers to people's searches. With the increasing number of featured snippets, this tendency to keep traffic away from publishers will likely grow. Moreover, featured snippets are often what voice assistants cite in response to queries.

Paid search expansion

A few years ago, Google switched Google Shopping from an organic model to pay-per-click. In 2016, paid ads came to local search. The trend will likely persist, and we'll see more native-like ad formats in the near future.

Reviewed by Yasser fa on January 05, 2018
